Reliable Vision-Based Grasping Target Recognition for Upper-limb Prostheses

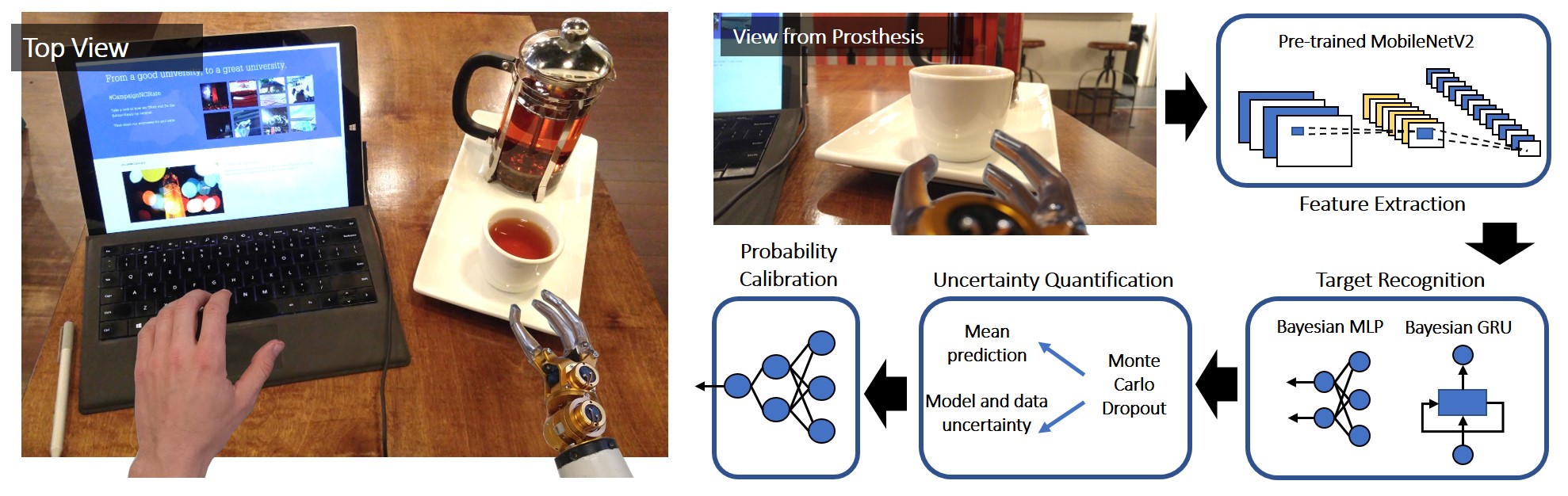

Computer vision has shown promise in wearable robotics applications such as predicting human grasping targets and understanding context. In practice, however, the performance of computer vision algorithms is degraded by insufficient or biased training, observation noise, cluttered backgrounds, and similar factors. Leveraging Bayesian Deep Learning (BDL), we developed a novel, reliable vision-based framework to assist upper-limb prosthesis grasping during arm reaching. The framework measures different types of uncertainty, arising from both the model and the data, for grasping target recognition in realistic and challenging scenarios. A probability calibration network fuses these uncertainty measures into a single calibrated probability for online decision-making. We formulated the problem as predicting the grasping target during arm reaching. We also developed a 3D simulation platform to simulate and analyze the performance of vision algorithms under several challenging scenarios that are common in practice. In addition, we integrated our approach into a shared control framework for a prosthetic arm and demonstrated its potential for assisting human participants in fluent target reaching and grasping tasks.
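
To make the idea of separating model and data uncertainty concrete, here is a minimal sketch in plain Python. It assumes the class probabilities come from several stochastic forward passes of a Bayesian network (for example, Monte Carlo dropout), which this page does not specify. The entropy-based decomposition is one common choice from the BDL literature, not necessarily the one used in this work. The `fuse` function is a hypothetical logistic stand-in for the probability calibration network, and its weights are caller-supplied placeholders rather than trained values.

```python
import math
from typing import Dict, List, Sequence


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a categorical distribution."""
    return -sum(q * math.log(q) for q in p if q > 0.0)


def uncertainty_measures(samples: List[List[float]]) -> Dict[str, float]:
    """Split predictive uncertainty into data and model parts.

    samples[t][c] is the probability of candidate target c from the
    t-th stochastic forward pass (assumed sampling scheme).
    """
    n_samples = len(samples)
    n_classes = len(samples[0])
    # Average the sampled distributions to get the predictive mean.
    mean = [sum(s[c] for s in samples) / n_samples for c in range(n_classes)]
    total = entropy(mean)                                  # predictive entropy
    data = sum(entropy(s) for s in samples) / n_samples    # expected entropy
    model = max(total - data, 0.0)                         # mutual information
    return {"total": total, "data": data, "model": model}


def fuse(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Hypothetical stand-in for a calibration network: a logistic
    function mapping uncertainty features to one probability in (0, 1)."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Passes that agree: uncertainty is attributed almost entirely to the data.
agree = [[0.9, 0.05, 0.05]] * 3
# Passes that disagree on the target: large model uncertainty.
disagree = [[0.9, 0.05, 0.05], [0.05, 0.9, 0.05], [0.05, 0.05, 0.9]]

u_agree = uncertainty_measures(agree)
u_disagree = uncertainty_measures(disagree)
```

In this sketch, `u_agree["model"]` is essentially zero because every pass returns the same distribution, while `u_disagree["model"]` is large because the passes favor different targets. An online controller could pass such measures through a trained calibration model and act only when the resulting probability exceeds a chosen threshold.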

This work was a collaboration between the ARoS Lab and the Neuromuscular Rehabilitation Engineering Laboratory (NREL) at UNC Chapel Hill / NC State. It was supported by the National Science Foundation (NSF) under Awards CNS-1552828 and NRI-1527202, and by the Department of Defense (DOD) under Awards W81XWH-15-C-0125 and W81XWH-15-1-0407.