Code: https://github.com/iVMCL/LostGANs

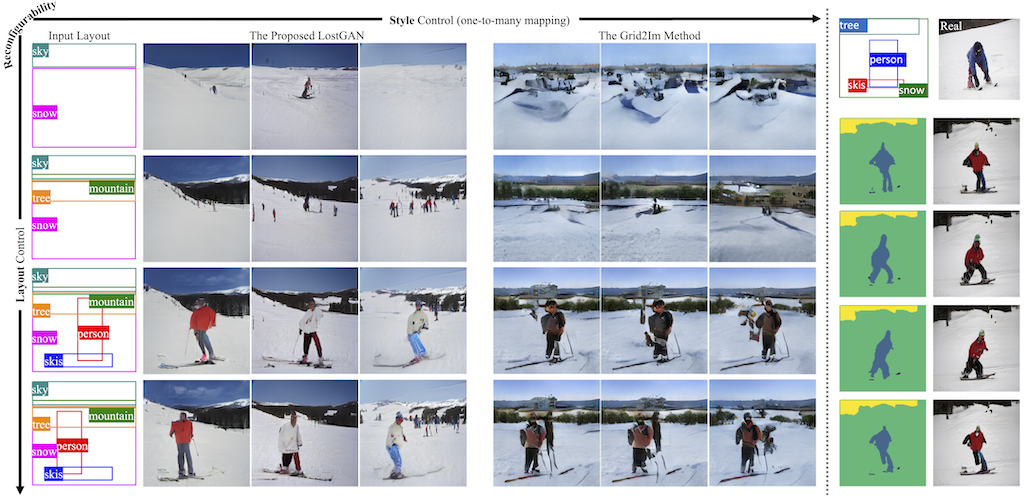

Illustration of controllable image synthesis from reconfigurable spatial layouts and style codes in the COCO-Stuff dataset at the resolution of 256x 256.

On the Left Panel: The proposed method is compared with the prior art, the Grid2Im method. Each row shows effects of style control, in which three synthesized images are shown using the same input layout on the left by randomly sampling three style latent codes. Each column shows effects of layout control in terms of consecutively adding new objects (the first three) or perturbing an object bounding box (the last one), while retaining the style codes of existing objects unchanged.

Advantages of the proposed method: Compared to the Grid2Im method, (i) the proposed method can generate more diverse images with respect to style control (\eg, the appearance of snow, and the pose and appearance of person). (ii) The proposed method also shows stronger controllability in retaining the style between consecutive spatial layouts. For example, in the second row, the snow region is not significantly affected by the newly added mountain and tree regions. Our method can retain the style of snow very similar, while the Grid2Im seems to fail to control. Similarly, between the last two rows, our method can produce more structural variations for the person while retaining similar appearance. Models are trained in the COCO-Stuff dataset and synthesized images are generated at a resolution of 256×256 for both methods. Note that the Grid2Im method utilizes ground-truth masks in training, while the proposed method is trained without using ground-truth masks, and thus more flexible and applicable in other datasets that do not have mask annotations such as the Visual Genome dataset.

On the Right Panel: Illustration of the fine-grained control at the object instance level. For an input layout and its real image in the first row, four synthesized masks and images are shown. Compared with the 2nd row, the remaining three rows show synthesized masks and images by only changing the latent code for the Person bounding box. This shows that the proposed method is capable of disentangling object instance generation in an synthesized image at both the layout-to-mask level and the mask-to-image level, while maintaining a consistent layout in the reconfiguration. Please see text for details.